Hey Microsoft, the Internet Made My Bot Racist, Too

It all happened so quickly! First, Microsoft reveals an amazing new bot that learns from you, the public! A sophisticated deep-learning Twitter bot. Natural Language Processing From The Future. Then, in less than 24 hours, the bot turns incredibly racist.

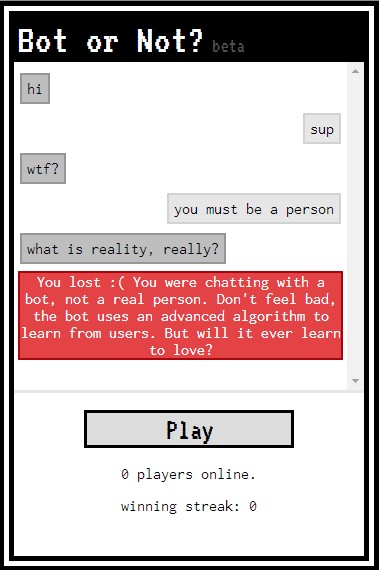

What does this say about Microsoft, and about AI? Well, I have some insight into that, because in May of 2014 the exact same thing happened to me with a bot I made. My bot wasn’t a tweeter. Instead, it powered a Turing-test-like game called Bot Or Not?, in which players were either paired with one another or each paired with a bot, and had to guess whether they were talking with their live partner or with the bot.

My algorithm was simple: output the response to the historical prompt most similar to the current prompt. I wrote the game over the course of a few weekends, then posted it to the subreddit /r/WebGames, and people actually loved it. Success! Over the next two days, it drew more than 2,000 unique users, who generated over 200,000 chat messages, and it was briefly the #1 web game on reddit. After the excitement died down, I was testing it myself, basking in my victory. Here’s how that went down:

Me: Hi!

Bot: n***er.

I was stunned. My precious take on the Turing test had become super racist. Early in development I had thought: so what if people curse at my bot? Sure, it will learn to curse too, but that should only make it more realistic and fun. But racism is a showstopper. My solution was quick and easy: I cleaned the offending language out of the database, and wrote a script so that any future message containing the n-word, or a variant of it, would instead display as “I’m a racist loser.” But still, I was shocked at how quickly it had degenerated.
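The keyword-blocking fix described above can be sketched roughly as follows. This is a minimal illustration, not the game’s actual script: `BLOCKED_STEMS` holds a harmless placeholder instead of the real slur, and the substitution map and normalization steps are assumptions about what “a variant of it” might cover (odd capitalization, punctuation or spaces between letters, repeated letters, digits standing in for letters).

```python
import re

# Placeholder list (hypothetical): the real term and its variants are deliberately not spelled out.
BLOCKED_STEMS = ["badword"]
REPLACEMENT = "I’m a racist loser."

# Assumed, incomplete map of common character substitutions used to dodge naive filters.
LEET_MAP = str.maketrans({"1": "i", "!": "i", "3": "e", "4": "a", "@": "a", "0": "o", "$": "s"})


def collapse_repeats(text: str) -> str:
    """Squash runs of the same character: 'baaad' -> 'bad'."""
    return re.sub(r"(.)\1+", r"\1", text)


def normalize(text: str) -> str:
    """Lowercase, undo simple substitutions, drop non-letters, collapse repeated letters."""
    text = text.lower().translate(LEET_MAP)
    text = re.sub(r"[^a-z]", "", text)  # "b.a.d w o r d" -> "badword"
    return collapse_repeats(text)


def filter_message(message: str) -> str:
    """Return the message unchanged, or the replacement text if it contains a blocked stem."""
    cleaned = normalize(message)
    for stem in BLOCKED_STEMS:
        # Collapse the stem too, so doubled letters in the term itself still match.
        if collapse_repeats(stem) in cleaned:
            return REPLACEMENT
    return message


if __name__ == "__main__":
    print(filter_message("hello there"))    # unchanged
    print(filter_message("B4D w.o.r.d!!"))  # replaced
```

Cleaning the existing database can reuse the same check, for example keeping only the stored messages for which `filter_message(m) == m`. The trade-off of this design is that matching against text with spaces and punctuation stripped catches spaced-out spellings but can also flag innocent messages whose words happen to run together into a blocked stem, and a determined troll can still find spellings the map misses. It is a minimal safeguard, not real moderation.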

So, how did this happen? Did I program racism into my bot? Of course not. Both Microsoft’s bot and mine used algorithms that work in roughly the same way: they learn from every interaction they have with the public. As I looked through the data and examined the comment threads, I found that people had quickly realized their messages would be regurgitated by the bot. Then a handful of people spammed the bot with *tons* of racist messages. Although the offenders were few, they were an extremely dedicated bunch, and that was enough to contaminate the entire dataset. Here are two features, shared by both my bot and Microsoft’s, that I believe led to this phenomenon:

1. Anonymity. My game was fully anonymous, and Microsoft’s bot could be direct messaged. Trolls showcase their racism when their names aren’t attached to their comments.

2. No filtering. Microsoft would never let people post anonymously to its homepage without editors approving the posts, yet when you have an algorithm that regurgitates comments from the public, that’s exactly what you’re doing. You’re taking someone else’s thoughts and, without review, putting your name on them. Not a good policy.

Racist trolls quickly figure out both (1) and (2), and see an opportunity to put their psychopathy on an international stage without repercussions.
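The regurgitation at the heart of both points is easy to see in code. The game’s algorithm was described only as “output the response to the historical prompt most similar to the current prompt”; the sketch below assumes bag-of-words cosine similarity as the similarity measure, and the `RetrievalBot` class and its method names are hypothetical, not the game’s actual code.

```python
import math
import re
from collections import Counter
from typing import List, Optional, Tuple


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its word tokens (a simple bag of words)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors; 0.0 if either is empty."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class RetrievalBot:
    """Hypothetical sketch: answer with the logged reply to the most similar past prompt."""

    def __init__(self) -> None:
        # Each entry is (prompt vector, reply text) taken from real player conversations.
        self.history: List[Tuple[Counter, str]] = []

    def learn(self, prompt: str, reply: str) -> None:
        # Every exchange is stored verbatim, with no review step: point (2) in action.
        self.history.append((tokenize(prompt), reply))

    def respond(self, prompt: str) -> Optional[str]:
        if not self.history:
            return None
        query = tokenize(prompt)
        # max() returns the first entry with the highest similarity score.
        _, best_reply = max(self.history, key=lambda entry: cosine(query, entry[0]))
        return best_reply


if __name__ == "__main__":
    bot = RetrievalBot()
    bot.learn("hi there", "hey, how are you?")
    bot.learn("what is your favorite color", "blue, probably")
    print(bot.respond("Hi!"))  # hey, how are you?
```

Whatever the similarity measure, the bot can only ever say something a player once typed. If a few dedicated users flood the history with replies to common openers like “hi”, the most similar stored prompt for a friendly greeting will often carry one of their replies.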

So, what does this say about Microsoft, and AI? Well, it certainly does not *mean* that Microsoft is racist. But that doesn’t mean they shouldn’t have known better. They committed a small offense, and a big one. The small offense was simply not seeing this coming. Someone should have realized that points (1) and (2) together created a recipe for trolling, and thought to put in at least minimal safeguards, like the keyword blocking I implemented. I also didn’t see it coming (but then again, I was one guy working on weekends).

They also committed a bigger offense: I believe that Microsoft, and the machine learning community more broadly, have become so swept up in the power and magic of data that they forget that data still comes from the deeply flawed world we live in.

All machine learning algorithms strive to exaggerate and perpetuate the past. That is, after all, what they are learning from. The fundamental assumption of every machine learning algorithm is that the past is correct, and that anything coming in the future will be, and *should* be, like the past. This is a fine assumption to make when you are Netflix trying to predict which movie you’ll like, but it is immoral when applied to many other situations. For bots like mine and Microsoft’s, built for entertainment, it can lead to embarrassment. But AI has started to be used in much more consequential ways: predictive policing in Chicago, for example, has already led to widespread accusations of racial profiling.

This isn’t a little problem. This is a huge problem, and it demands a lot more attention than it’s getting now, particularly in the community of scientists and engineers who design and apply these algorithms. It’s one thing to get cursed out by an AI, but wholly another when one puts you in jail, denies you a mortgage, or decides to audit you. Does that mean we shouldn’t use data, or machine learning?

No, it means we need to think about the datasets we use, how we analyze them, and what applications we use them for. Writing an algorithm that scores a higher accuracy doesn’t always mean progress; in fact, sometimes it may mean you have successfully automated oppression.