The Business Analytics Industry Is A Big Dumpster Fire

It’s time to focus on the executive–developer relationship.

Current State of the Business Analytics Industry

I’ve been working with data, data-driven applications, and analytics for over ten years now. I’ve worked at numerous startups, a big consulting firm, the federal government, and as a freelancer. I’ve seen enough to spot one clear trend: the business analytics industry is failing its customers spectacularly, largely because of an overemphasis on technology and a disregard for the executive–developer relationship.

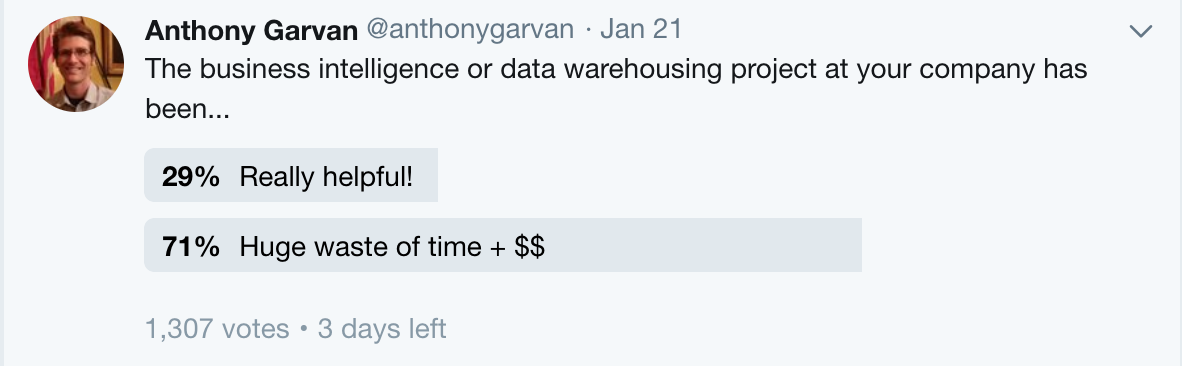

I’ve been wondering lately: Is it just me? Are things like expensive BI tools and data warehouses really great, and I’ve been in weird situations where they didn’t work out? So I ran a quick Twitter poll targeting business executives, and the results could not have surprised me less: over seven out of ten executives responded that the BI or Data Warehouse projects in their company were a “huge waste of time + $$”.

Twitter polls are far from scientific, but it’s clear that some of the most established products of the business analytics industry are big losers from the customer perspective. I would be shocked if newer “Big Data” projects fared any better.

What if the auto industry tried this business model? “Here’s your car; there’s a 70% chance it won’t work!” Yet for multi-million-dollar projects, this is somehow the accepted state of the art? It certainly shouldn’t be, and it’s time to start thinking deeply about why this failure persists over numerous “innovative” technology cycles.

Despite decades of tremendous improvements in computer hardware and software, things are still terrible out there for executives who just want to understand their business and operations. Business analytics is old — the term was coined in 1958, even before the invention of the integrated circuit. And it’s big — estimated to be nearly $68B by 2019. Shouldn’t we be good at this by now? Why is this industry failing so miserably?

I’d be interested in hearing your ideas. Here’s my pet theory: failed analytics efforts cast technology as the hero, which inevitably leads to a search for More Powerful technology, which leads to wildly over-engineered solutions that are difficult to operate and maintain. The assumption is that the technology is the thing that’s providing the value, with developers more or less keeping it running and executives acting on the spoon-fed conclusions. The successful efforts I’ve been a part of do the precise opposite: they are based on a solid human relationship between an executive and a developer, and the technology only serves as a means to an end. The primary value is a dialog of questions and answers, with tools chosen only insofar as they push that conversation forward.

I’ll give one example from my experience that ended up being a fairly controlled experiment. We wanted to analyze Twitter activity from an annual conference. The previous year, a team of three engineers had done the analysis in Hadoop and Hive (popular “Big Data” technologies). The business folks had no clear idea beforehand of what they wanted, so the engineers and technical manager had chosen the Ultimately Powerful Technology. But the tech was difficult to use and very complex to set up and configure. It took three months after the conference ended to deliver the results, and by that time nobody cared.

This year, two weeks ahead of time, a savvy manager got me a nice infographic and made the goal clear: “We want this, but with real numbers.” We talked it out over the phone. What exactly does this number mean? How is this counted? It turned out that the data wasn’t Big at all and the requirements were really straightforward. The week before the conference, I wrote a Python script that loaded tweets into a SQLite database (the lightweight database that comes with Python) and ran about ten queries. The manager had her numbers as soon as the conference finished, and the infographic was published to customers within days.
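To show how little machinery this kind of job needs, here is a minimal sketch of such a script using Python's built-in `sqlite3` module. It is not the original script. The table schema, the field names (`id`, `author`, `text`, `created_at`), the sample rows, and the four queries are hypothetical stand-ins for whatever the real tweet export and the roughly ten real questions looked like. It also assumes the tweets have already been collected as a list of dicts.

```python
import sqlite3

# Hypothetical sample tweets; a real script would read these from an export or API dump.
SAMPLE_TWEETS = [
    {"id": 1, "author": "alice", "text": "Great keynote! #conf", "created_at": "2015-05-01T09:15:00"},
    {"id": 2, "author": "bob", "text": "Packed room for the data talk #conf", "created_at": "2015-05-01T10:02:00"},
    {"id": 3, "author": "alice", "text": "Slides are up #conf", "created_at": "2015-05-02T14:30:00"},
    {"id": 4, "author": "carol", "text": "Loved the workshop #conf", "created_at": "2015-05-02T16:45:00"},
    {"id": 5, "author": "alice", "text": "See you next year #conf", "created_at": "2015-05-03T11:00:00"},
]


def load_tweets(conn, tweets):
    """Create the (hypothetical) tweets table and insert rows, skipping duplicate ids."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS tweets (
               id INTEGER PRIMARY KEY,
               author TEXT NOT NULL,
               text TEXT NOT NULL,
               created_at TEXT NOT NULL
           )"""
    )
    # Named placeholders let executemany consume the dicts directly.
    conn.executemany(
        "INSERT OR IGNORE INTO tweets (id, author, text, created_at) "
        "VALUES (:id, :author, :text, :created_at)",
        tweets,
    )
    conn.commit()


# Each entry answers one question agreed on with the business side (illustrative only).
QUERIES = {
    "total_tweets": "SELECT COUNT(*) FROM tweets",
    "unique_authors": "SELECT COUNT(DISTINCT author) FROM tweets",
    "tweets_per_day": (
        "SELECT date(created_at) AS day, COUNT(*) FROM tweets "
        "GROUP BY day ORDER BY day"
    ),
    "top_authors": (
        "SELECT author, COUNT(*) AS n FROM tweets "
        "GROUP BY author ORDER BY n DESC, author LIMIT 3"
    ),
}


def run_report(conn):
    """Run every query and return {query_name: list_of_rows}."""
    return {name: conn.execute(sql).fetchall() for name, sql in QUERIES.items()}


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # a file path would persist the data instead
    load_tweets(conn, SAMPLE_TWEETS)
    for name, rows in run_report(conn).items():
        print(name, rows)
```

Keeping every question as a named SQL string means a new one from the manager is a one-line addition. Switching `":memory:"` to a file path keeps the data around between runs.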

With the tech-centric approach, a lack of communication and clarity begat complex technology and infrastructure, making it extremely difficult to make any progress on what ended up being simple requirements. In the human-centric approach, a little planning and conversation made it clear we didn’t need fancy technology, and could deliver what we needed in real time.

So, here’s my advice for developers: get attuned to the problems facing your business. Make sure you’re solid on programming fundamentals. Learn about what data your organization is creating and brainstorm resourceful ways to get the answers to the most urgent questions. Be curious. Focus on the user (the consumer of the results), what they need, and how they want to be communicated with. Don’t build an Apache zoo if a Python script will do. Don’t build a dashboard if they want a phone call. It’s your job to be listening at the right moment and say, “That’s easy, I can answer that.” And then to follow through.

And here’s my advice for executives: don’t buy more business analytics mega-products. Focus on defining the problems, prioritizing them, and communicating them effectively. If you ask for everything, you’ll likely get nothing. Get developers in the room and talk with the people who will actually be writing the code, not their manager or manager’s manager. It is that human, reciprocal relationship that forms the basis of any value you will be able to create together.